ORIGINAL RESEARCH ARTICLE

Fostering postgraduate student engagement: online resources supporting self-directed learning in a diverse cohort

Luciane V. Mello*

School of Life Sciences, University of Liverpool, Liverpool, United Kingdom

Abstract

The research question for this study was: ‘Can the provision of online resources help to engage and motivate students to become self-directed learners?’ This study presents the results of an action research project to answer this question for a postgraduate module at a research-intensive university in the United Kingdom. Results were analysed by dividing the students according to their degree programme (Masters or PhD) and according to their language background. The study indicated that the online resources embedded in the module were consistently used, and that the measures put in place to support self-directed learning (SDL) were both perceived and valued by the students, irrespective of their programme or native language. Nevertheless, students differed in how they viewed SDL: doctoral students seemed to prefer the approach and were more receptive to it than students pursuing a Masters degree. Some students reported that the SDL activity helped them to become more independent than traditional approaches to teaching did. Students who engaged with the online resources obtained higher marks and reported feeling more motivated within the module. Despite the different learning experiences of this diverse cohort, the study found that the blended nature of the course and its resources in support of SDL created a learning environment that positively affected student learning.

Keywords: self-directed learning; online resources; postgraduate teaching; higher education; prior knowledge

Citation: Research in Learning Technology 2016, 24: 29366 - http://dx.doi.org/10.3402/rlt.v24.29366

Responsible Editor: Peter Reed, Laureate Online Education and the University of Liverpool Online, UK.

Copyright: © 2016 L.V. Mello. Research in Learning Technology is the journal of the Association for Learning Technology (ALT), a UK-based professional and scholarly society and membership organisation. ALT is registered charity number 1063519. http://www.alt.ac.uk/. This is an Open Access article distributed under the terms of the Creative Commons Attribution 4.0 International License, allowing third parties to copy and redistribute the material in any medium or format and to remix, transform, and build upon the material for any purpose, even commercially, provided the original work is properly cited and states its license.

Received: 6 August 2015; Accepted: 20 February 2016; Published: 17 March 2016

*Correspondence to: Email: lumello@liverpool.ac.uk

To access the supplementary material to this article, please see Supplementary files under ‘Article Tools’.

Introduction

Postgraduate cohorts are diversifying as a result of changes in UK higher education, the shifting priorities of universities and the changing training requirements of students. Students’ prior knowledge is key to their success, particularly in taught modules. The prior knowledge of a diverse cohort naturally varies considerably, so students’ preparedness for higher-level training in different areas also varies. The proposal explored here is that students will benefit more from, and engage more deeply with, their training if they are supported in acquiring the prior knowledge required for the modules.

The research question addressed here was: ‘Can the provision of online resources help to engage and motivate students to become self-directed learners?’ First, self-directed learning (SDL) was explored as one way to support the transition from undergraduate to postgraduate learning. The research then assessed the perceived value of the online learning tools (online tests and podcasts) that facilitated SDL. The study also reports analyses of students’ prior knowledge, their motivation for learning and their engagement with it.

Background

Handelsman et al. (2004) argued that scientific teaching is faithful to the process of discovery which is embedded in science. Science teaching must therefore encompass the critical thinking, rigour and creativity that define research. The objectives are thus to help students improve their thinking skills, and to encourage students to achieve deep learning. Learning based on knowledge acquisition through teacher transmission is therefore less compatible with scientific teaching, where a continuous stimulation of critical thinking about new problems and situations is essential.

Knowles (1975) analysed reasons for adopting an SDL approach, linking SDL with lifelong learning and with learning in educational contexts. There is also a clear link between SDL and scientific teaching: to succeed in science, students must engage actively in their own learning. They must identify their goals, the strategies to be employed and the methods to be used, and must be able to evaluate their decisions and outcomes. These features are the basis of SDL (Brookfield 1991).

Koohang and Paliszkiewicz (2013) argued that e-learning promotes active learning, which in turn promotes knowledge construction. Chung and Davis (1995) showed that blended learning environments can help students to take responsibility for aspects of their learning such as time management and searching for learning resources. These findings support a link between online resources, active learning and SDL.

In UK higher education, the Research Councils UK (RCUK) funding agencies require further skills training for PhD students in STEM subjects (science, technology, engineering and mathematics). As a result, Masters and PhD students take the same modules at postgraduate (PG) level. For the purpose of this study, in the context of postgraduate teaching, diversity is conceptualised as encompassing various differences in students’ backgrounds. These differences dictate students’ prior knowledge and, consequently, their readiness to acquire new knowledge at postgraduate level.

At PG level, a working knowledge of bioinformatics has become essential for students, as most research projects generate data that require bioinformatics analysis (Hingamp et al. 2008; Via et al. 2013). After the bioinformatics module had run at PG level for 2 years, differences in students’ prior knowledge became increasingly visible, as the module was receiving students from increasingly diverse undergraduate (UG) backgrounds.

Background information about the module

The module was designed to give a broad overview of bioinformatics to students on a new Masters programme (Advanced Biological Sciences, ABS). Its design drew on the expertise of members of staff in different areas of bioinformatics. The module consisted of a series of 1-hour lectures, each followed by a 2-hour workshop in which students had the opportunity to learn by doing (Blumenfeld et al. 2006).

From its second year, the module received students from three other Masters programmes: the PG-level MSc and MRes programmes in Post-genomic Sciences (PGS) and the Integrated Masters (IntMaster). Numbers on the latter course were very small (Table 1). IntMaster students were, however, in the same M-level year as the MSc and MRes students, so, although they are undergraduates, they were combined with them in the overall numbers for this analysis. From the academic year 2011–12, RCUK required PhD students to take taught modules as part of their training. When they joined the module in that year, they made up a substantial share of its students (18 of 45; Table 1). Further diversity resulted from the enrolment of both British and international students, some of them English native speakers (ENS) and others speaking English as a second language (ESL).

Table 1. Size and programme composition of the classes over 4 years of module delivery.

| Cohort year | Total size | Masters ABS: MSc | Masters ABS: MRes | Masters PGS: MSc | Masters PGS: MRes | IntMaster | PhD | Language: ENS | Language: ESL |
|---|---|---|---|---|---|---|---|---|---|
| 2009–10 | 16 | 10 | 2 | 0 | 0 | 0 | 4 | 7 | 9 |
| 2010–11 | 27 | 14 | 5 | 3 | 1 | 3 | 1 | 18 | 9 |
| 2011–12 | 45 | 15 | 5 | 4 | 2 | 1 | 18 | 27 | 18 |
| 2012–13 | 33 | 2 | 6 | 1 | 7 | 1 | 16 | 23 | 10 |

ABS=advanced biological sciences, ENS=English native speaker, ESL=English second language, PGS=post-genomic sciences.

Recognising the range of potential in the postgraduate cohort, staff shifted delivery from the ‘traditional’ lecture structure to a more interactive model, aimed at engaging students better and thereby improving their learning. To achieve this, online resources in the form of podcasts and online tests were introduced, giving the module a blended structure that combined face-to-face delivery with e-learning. The goal was to use an SDL approach to keep students engaged with the next topic to be discussed. The change in teaching approach was introduced over two consecutive academic years. The purpose of this research was to determine whether the provision of online resources would help to engage and motivate students to become self-directed learners, and thereby improve their preparedness for the bioinformatics postgraduate module. Two categories were used when analysing the effect of the intervention: programme of study and language. Entry requirements vary between postgraduate programmes (usually being higher for PhD students). The first categorisation therefore evaluated whether students’ previous degree classification influenced the extent to which they already had the prior knowledge required for the module, and their subsequent performance. The second categorisation allowed an assessment of whether language could act as a barrier to learning. As cohort sizes were small in the Masters programmes, they were combined with PhD students to obtain meaningful statistics.
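The two categorisations can be cross-checked against Table 1. The sketch below is a minimal illustration in Python, not the analysis actually run (which used SPSS). It transcribes the table counts and pools the five M-level columns (ABS MSc/MRes, PGS MSc/MRes and IntMaster) into a single Masters group, as described above. The dictionary names, the ASCII year keys and the column order are assumptions of the sketch.

```python
# Counts transcribed from Table 1.
# Column order (an assumption of this sketch):
# ABS MSc, ABS MRes, PGS MSc, PGS MRes, IntMaster, PhD, ENS, ESL
TABLE_1 = {
    "2009-10": (10, 2, 0, 0, 0, 4, 7, 9),
    "2010-11": (14, 5, 3, 1, 3, 1, 18, 9),
    "2011-12": (15, 5, 4, 2, 1, 18, 27, 18),
    "2012-13": (2, 6, 1, 7, 1, 16, 23, 10),
}
TOTAL_SIZE = {"2009-10": 16, "2010-11": 27, "2011-12": 45, "2012-13": 33}


def categorise(row):
    """Pool the five M-level columns into one Masters group."""
    *m_level, phd, ens, esl = row
    return {"Masters": sum(m_level), "PhD": phd, "ENS": ens, "ESL": esl}


for year, row in TABLE_1.items():
    groups = categorise(row)
    # Both categorisations should account for every student in the cohort.
    assert groups["Masters"] + groups["PhD"] == TOTAL_SIZE[year]
    assert groups["ENS"] + groups["ESL"] == TOTAL_SIZE[year]
    print(year, groups)
```

Pooling in this way gives, for example, 27 Masters and 18 PhD students in 2011–12, which is the Masters/PhD split used in the comparisons that follow.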

Research methods

The study was designed as action research, which Ferrance (2000) describes as ‘a cycle of posing questions, gathering data, reflection, and deciding on a course of action’. Ethical approval was sought and obtained through the Research Ethics Committee. Statistical analyses were performed using SPSS-21.0 (Nie 1975), with significance assessed at the p<0.05 level. Likert-scale data from the questionnaires were treated as ordinal data, whereas performance data were treated as scale data. Masters and PhD students who completed more or less than 50% of the online tests were compared using one-way ANOVA, and specific group differences were tested with Tukey’s post-hoc test. Differences in performance between students who completed more or less than 50% of the online tests were analysed using t-tests for independent samples. The Mann–Whitney U test was used to evaluate whether questionnaire answers differed significantly between students from different programmes or with different English language backgrounds.
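For readers who want to reproduce the ordinal comparisons outside SPSS, the following is a minimal Python sketch of the Mann–Whitney U test. It uses the large-sample normal approximation with a correction for tied ranks and no continuity correction. It is an illustrative reimplementation, not the procedure used in the study. The function names and the example Likert responses are hypothetical. Reporting the smaller of the two U values gives a z that is zero or negative, which matches the negative z values quoted in the results.

```python
from collections import Counter
from math import erf, sqrt


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # extend j over the run of tied values starting at position i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U, z, two-sided p) for two independent samples."""
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    n = n1 + n2
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)
    # variance of U, reduced for ties (Likert data are heavily tied)
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    sigma = sqrt(n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1))))
    if sigma == 0:
        raise ValueError("all observations are tied; z is undefined")
    z = (u - n1 * n2 / 2) / sigma
    p = 1 - erf(abs(z) / sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return u, z, p


# Hypothetical 5-point Likert answers (1 = strongly disagree ... 5 = strongly agree)
ens = [5, 4, 4, 5, 3, 4]
esl = [4, 5, 4, 3, 5]
print(mann_whitney_u(ens, esl))
```

The normal approximation is reasonable for group sizes like those in Figures 3 and 5. For very small groups, an exact test would be preferable.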

The main study: methods and interventions

The interventions: SDL and online resources

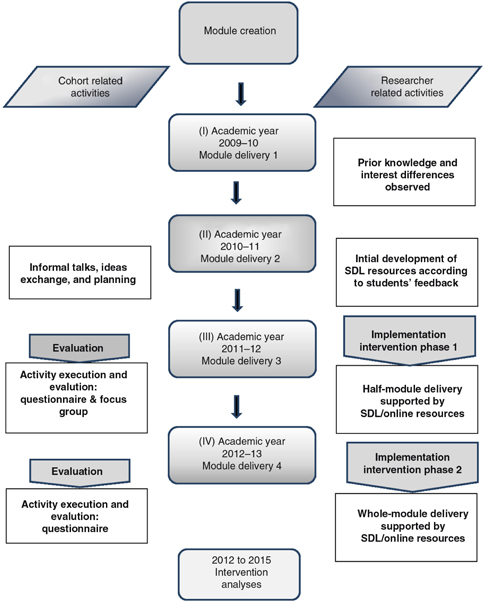

This work covers a period of 4 years, from the 2009–10 academic session, when the module was delivered for the first time, to 2012–13, its fourth year (Figure 1). The problems relating to student diversity were first identified in the 2010–11 session. Staff responses, in the form of intervention phases 1 and 2, followed in 2011–12 and 2012–13, respectively. In intervention phase 1, half of the face-to-face module activities were complemented by online resource activities (ORAs). Intervention phase 2 extended the online activities to almost the whole module (Table 2).

Figure 1.

Diagram illustrating the planning, execution and evaluation of the action research project in chronological order: (I) module delivery 1, just after module creation; (II) module delivery 2, when the problem was identified and first ideas emerged; and module deliveries 3 (III) and 4 (IV), the two intervention phases.

Table 2. Module structure.

| Academic year | Intervention | Number of lectures (1 hour) | Number of workshops (2 hours) | SDL/online resources: podcasts | SDL/online resources: tests |
|---|---|---|---|---|---|
| 2011–12 | Phase 1 | 8 | 11 | 7 | 5 |
| 2012–13 | Phase 2 | 8 | 11 | 11 | 9 |

The interventions implemented centred on enhancement of SDL and considered three key aspects: resources, formal support and clarity of expectation. Resources, additional to the core module elements of face-to-face lectures and workshops, were provided in the form of podcasts and online tests. Formal support was provided in an additional computer skills workshop in the first week of the module, ensuring that lack of IT skills would not hold back students’ learning. Regarding expectations, students received information about the online resources in the first lecture of the course, and were made aware of their own responsibility for their learning. This message was regularly reinforced during the course, reflecting the claim of Volery and Lord (2000) that ‘the level of interaction between the students and the lecturer appears predominant in online delivery’. A brief explanation of SDL was given to the students, discussing staff expectations and the reasons behind the introduction of the online resources: student diversity and variable prior knowledge. The online resources were directly connected with the lecture and workshop material, as explained below.

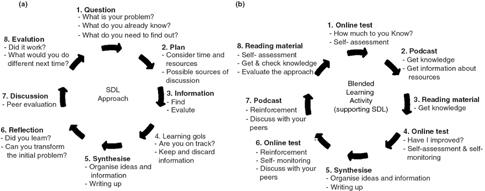

As students’ prior knowledge can dictate their readiness to learn new material, the expectation was that through SDL the students would have the opportunity to achieve the required ‘starting point’ for each session in the module. Figure 2a illustrates the questions which helped in the design of the blended activity to support SDL (Figure 2b). The activity is described in detail in the next section.

Figure 2.

(a) The key questions designed to support an SDL approach. (b) The blended learning activity designed to support SDL.

The interventions: blended learning activity

The ORA consisted of podcasts and online tests, complemented by reading material (Figure 2b). The resources were made available to students a week before a module session (lecture plus workshop). In addition, students had access to lecture slides and workshop handouts in advance through the university’s virtual learning environment (Blackboard).

Podcasts were used to explain the theory (lecture) and exercises (workshop), drawing students’ attention to the prior knowledge required and suggesting pre-reading. According to Clark, Taylor, and Westcott (2012), ‘podcasting offers one means for the lecturer to support all members of the unit of study’s student population by supporting less experienced participants’. Test questions ranged from ‘testing prior knowledge’ to ‘testing new knowledge’ obtained from the podcasts and reading material. Formative feedback was provided for the tests, showing which answers were correct and the total final score. For incorrect answers, students were directed to resources where the correct answer could be found, which encouraged further reading. The same test was made available to the students after the session, with correct answers provided. In this way, the test was used not only as a formative assessment but also for feedback and self-monitoring (Sadler 1989). Overall, this blended delivery model aimed to foster knowledge transformation by keeping students engaged with the next topic to be discussed.
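The feedback loop described above can be expressed as a small data structure: a score, which answers were correct, and a pointer to further reading for each wrong answer. The sketch below is hypothetical. The study's tests were built in Blackboard, and the `Question` fields, the example questions and the resource labels are invented for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Question:
    prompt: str
    answer: str
    resource: str  # hypothetical pointer to where the correct answer can be found


def formative_feedback(questions, responses):
    """Score one attempt and point the student to resources for wrong answers."""
    if len(questions) != len(responses):
        raise ValueError("one response is needed per question")
    correct = [q.answer == r for q, r in zip(questions, responses)]
    return {
        "score": sum(correct),
        "out_of": len(questions),
        "correct": correct,
        # only incorrect items generate a reading pointer, encouraging further reading
        "read_next": [q.resource for q, ok in zip(questions, correct) if not ok],
    }


# Hypothetical two-question test: one prior-knowledge item, one new-knowledge item
test = [
    Question("Prior-knowledge question", "A", "pre-reading, item 1"),
    Question("New-knowledge question", "C", "podcast for this session"),
]
print(formative_feedback(test, ["A", "B"]))
```

Because nothing in the structure is marked or stored, the same function serves both the pre-session attempt and the post-session retake.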

The first intervention was a trial, covering half the module (Table 2), and occurred during module delivery 3 (Figure 1). In the first lecture of the module, students were made aware of the blended activity, and of the expectation that the online resources would be used by them for catch-up and/or reinforcement. The desirability of completion of the ORA before the face-to-face sessions was emphasised to students, with the explanation that it would help prepare them to acquire new knowledge. Students were told that the online resources would also be available after the face-to-face sessions so that tests could be retaken and used for self-monitoring.

Intervention phase 2 occurred in module delivery 4 (Figure 1) and covered almost the whole module. Six members of staff were involved in delivering the module, and five of them helped prepare the ORA in phase 2. The supplementary file describes the learning activities of the interventions in detail.

The main study: methods and evaluation of the outcome

Evaluation of this study aimed to assess if and how the students used the online resources offered in the module, if they found the resources beneficial to their learning experience and if the structure adopted in this study supported SDL. A questionnaire and a focus group were used to collect student feedback after intervention phase 1, and a questionnaire only after phase 2. In addition, students were invited to comment on the module in a school-wide end-of-module evaluation. A structured focus group was used, allowing students to freely discuss the online resources, and encouraging them to think about SDL. Free-text comments from questionnaires as well as the focus group transcript were analysed using thematic analysis (Braun and Clarke 2006).

Research findings

Students’ evaluation of the intervention phase 1

Questionnaires analysis

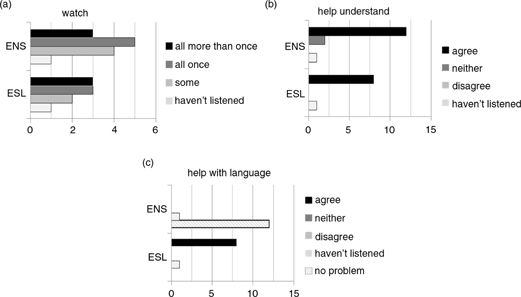

Because Blackboard could not record whether students listened to podcasts partially or fully, the podcast results are based on the half of the class who replied to the questionnaire designed to evaluate the intervention. Of all ENS and ESL students, 54 and 43%, respectively, participated in the survey.

For the online tests, a full analysis of students’ access, marks and number of attempts for each test was performed via Blackboard. Students’ perception of the influence of the tests on their learning was assessed using the questionnaire responses and compared with data from Blackboard.

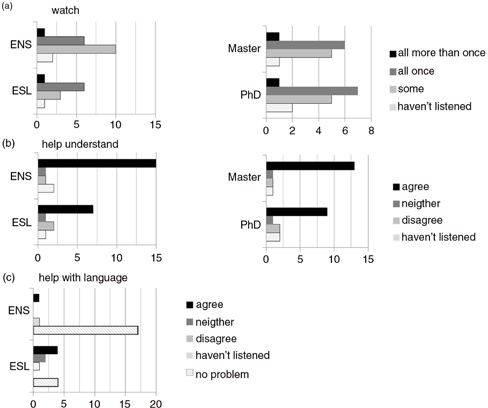

Figure 3a shows that 62% (ENS) and 67% (ESL) of the students watched all the podcasts, with no significant difference between the groups (p=0.944, z=−0.070). Figure 3b shows that students perceived a positive effect of the podcasts on their lecture and workshop learning, irrespective of whether English was their first or second language (p=0.823, z=−0.224). Although their English abilities naturally varied, all ESL students who listened to the podcasts said that the podcasts helped with the ‘language issue’ (Figure 3c).

Figure 3.

Students’ views on the podcasts (cohort 2011–12). Number of students from each group (ENS, English native speaker; ESL, English as second language) who (a) watched the podcasts; (b) level of agreement with the questionnaire statement ‘the podcast helped with lecture and workshop content understanding…’; (c) level of agreement with the questionnaire statement ‘the podcast helped with my language issue…’. Total number of students=22, ENS=13, ESL=9; Likert scale.

Except for Test3 and Test5, the majority of the students attempted the tests and most, 64%, completed them all (Table 3). Test5 was released in the same week as a summative assessment for a compulsory module, possibly explaining its low use. All students who responded to the questionnaire said the tests helped them with self-assessment, irrespective of their language background.

Table 3. Online tests 2011–12.

| Online test | OT1 | OT2 | OT3 | OT4 | OT5 | Mean±SD |
|---|---|---|---|---|---|---|
| Not finished | 30 | 11 | 8 | 17 | 7 | – |
| Completed | 37 | 65 | 44 | 70 | 26 | – |
| Total | 67 | 76 | 52 | 87 | 33 | 63±29 |

Percentage of students who attempted each online test (OT) with or without completion.
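The arithmetic behind Table 3 can be checked directly. This minimal Python sketch recomputes three things: the Total row from its two components, the share of attempts that were completed, and the mean attempt rate across the five tests. The variable names are assumptions. The mean reproduces the reported 63%. The ±29 dispersion figure is not recomputed because the table does not state how it was derived.

```python
from statistics import mean

# Percentages of students per online test, transcribed from Table 3 (2011-12)
NOT_FINISHED = {"OT1": 30, "OT2": 11, "OT3": 8, "OT4": 17, "OT5": 7}
COMPLETED = {"OT1": 37, "OT2": 65, "OT3": 44, "OT4": 70, "OT5": 26}


def summarise(not_finished, completed):
    """Per-test attempt rate (Total row) and the fraction of attempts completed."""
    rows = {}
    for test, done in completed.items():
        attempted = not_finished[test] + done
        rows[test] = {"attempted": attempted, "completion": done / attempted}
    return rows


rows = summarise(NOT_FINISHED, COMPLETED)
for test, row in rows.items():
    print(f"{test}: attempted {row['attempted']}%, {row['completion']:.0%} of attempts completed")
print("mean attempt rate:", mean(r["attempted"] for r in rows.values()))
```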

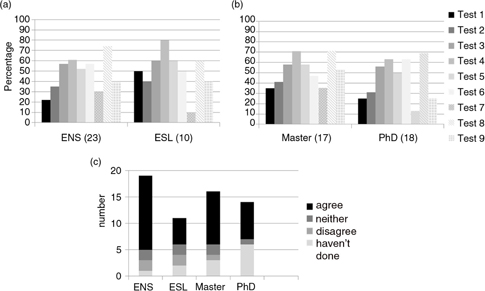

Figure 4 shows the percentage of students in each category (language and programme) who took each test. No significant difference was found between ENS and ESL (t-test: t30,9=0.26, p=0.80) or between Masters and PhD cohorts (t-test: t42,8=0.53, p=0.60). Masters degrees can be awarded with ‘distinction’ or ‘merit’, depending on mark criteria, whereas marks are not recorded on the PhD certificate. If students were concerned only with their marks, we might therefore expect Masters students to engage more with the online resources in order to achieve better marks, but this was not the case. In addition, all students who did the tests agreed that the tests helped their performance in assessments, irrespective of their language background or degree programme.
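The independent-samples t statistic used for these comparisons can be sketched in a few lines of Python. This is a minimal illustration of Student's pooled-variance t-test. It assumes equal variances, because the text does not say whether SPSS's equal- or unequal-variance result was reported. The example per-test percentages are hypothetical, not the study's data. A p-value also requires the t distribution: `scipy.stats.ttest_ind` computes the same statistic together with its p-value.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(a, b):
    """Student's two-sample t statistic with pooled variance; returns (t, df)."""
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, df


# Hypothetical percentages of each group attempting each of five tests
ens_pct = [70, 80, 55, 85, 35]
esl_pct = [65, 75, 50, 90, 30]
print(pooled_t(ens_pct, esl_pct))
```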

Figure 4.

Students’ attempts at online tests (cohort 2011–12). Percentage of students from each category: (a) ENS (English native speaker) and ESL (English as second language); and (b) Masters and PhD students who took each of the five online tests. N=the number of students in each group is indicated in parentheses.

The tests could also be taken after the face-to-face sessions, for self-assessment and self-monitoring. All students agreed with the statement ‘the online tests helped me with self-assessment’, which indicates positive use of the tests for self-assessment. Self-monitoring was quantified from how many times, and when, students took the tests. Ideally, students would take each test at least twice to monitor their own progress, as proposed in the blended activity cycle (Figure 2). However, analysis showed that few students took the tests both before and after the face-to-face sessions. The test feedback therefore gave students an opportunity for self-assessment, but few took the opportunity to self-monitor their progress. Many students later cited their heavy PG timetable, rather than a lack of interest in self-monitoring, as the reason for not revisiting the tests. Students are more likely to engage with summative than with formative assessment (Voelkel 2013), so making the test scores part of the module marks would probably increase the number of students revisiting the tests. However, this would go against the aim of the ORA in this module, which was to provide students with an optional ‘extra’ learning activity. Nevertheless, self-monitoring is directly involved in the process of SDL (Brookfield 1991; Knowles 1975), and a further study could investigate the lack of engagement with self-monitoring reported here. It is also possible that students used their marks on the continuous summative assessments to evaluate their own learning.
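Self-monitoring, as operationalised here, means taking the same test both before and after its face-to-face session. The hypothetical Python sketch below counts such students. It assumes that attempt logs can be exported as (student, test, timestamp) records and that each test's session start time is known. The record layout, the identifiers and the dates are assumptions, not Blackboard's export format.

```python
from datetime import datetime


def self_monitoring_students(attempts, session_start):
    """Return students who took at least one test both before and after its session.

    attempts: iterable of (student_id, test_id, timestamp) tuples (hypothetical layout)
    session_start: dict mapping test_id -> datetime of the face-to-face session
    """
    before, after = set(), set()
    for student, test, when in attempts:
        key = (student, test)
        if when < session_start[test]:
            before.add(key)
        else:
            after.add(key)
    # a (student, test) pair present in both sets counts as a self-monitoring revisit
    return {student for student, _ in before & after}


# Hypothetical session time and attempt log
sessions = {"OT1": datetime(2012, 10, 8, 10, 0)}
log = [
    ("s1", "OT1", datetime(2012, 10, 5, 14, 0)),  # before the session
    ("s1", "OT1", datetime(2012, 10, 12, 9, 0)),  # retaken afterwards
    ("s2", "OT1", datetime(2012, 10, 7, 20, 0)),  # before only
]
print(self_monitoring_students(log, sessions))
```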

Forty-one per cent of the students replied to the module evaluation questionnaire. Responses to the open questions from both questionnaires show students’ positive comments reinforcing the findings from the quantitative analysis. Typical comments are presented below:

E-learning activities should be provided for all modules. As a part-time student they were particularly useful for when I had to miss lectures due to work. I would have used all the podcasts/online tests if I’d have had enough time. (Student 1)

I felt that the e-learning activities were very useful and made the learning process much easier, especially for someone who has not come across many of these topics and subjects before. (Student 2)

This method provides confidence to those who struggle to adapt to a more self-directed learning strategy, whilst creating an ideal environment for those who relish their independence. (Student 3)

Focus group analysis

The main aim of the focus group was to evaluate students’ views of the online resources with respect to important features of SDL. Eleven students voluntarily attended the session, four MSc, five MRes and four PhD. The focus group discussion revealed students’ clear understanding of the activity, flexibility in the way it was used (depending on the student’s needs and available time), enhancement of the students’ perception of learning and appreciation of the tutor support and time dedicated towards the implementation of SDL (Table 4). Thus, it is fair to conclude that important SDL features were achieved in the new blended learning structure of the module and appreciated by the students.

Table 4. Students’ views on the blended activity regarding SDL.

| Students’ view | SDL features |
|---|---|
| Students generally understood the structure for SDL – pre-lecture podcasts to introduce new topics/support they could expect from the tutor. | Structure |
| Different students used the SDL resources in different ways – students new to topics found it a good starting point/PhD students used it as a check on topics they had already learnt/other students used references in reading to deepen topics they were interested in. | Flexibility |
| Not all students used all of the SDL resources all the time – if time was short they sometimes did not use them. | Flexibility |
| Most of the students found the structure and process supportive for their learning – none thought it to be too structured or under-structured. | Enhance learning |
| Many commented that they would like more staff to adopt a similar approach but appreciated that it must take a lot of effort on the tutor’s part to set them up. | Enhance learning |
| They valued and appreciated the apparent effort put in by the tutor and her responsiveness to problems or questions they were struggling with from the SDL resources. | Teacher as facilitator |
| Most used the online tests as a revision tool for assessments and appreciated the immediate feedback for self-assessment. | Structure and teacher as facilitator |

Students’ evaluation of the intervention phase 2

Thirty students out of 33 (91%) replied to the questionnaire designed to evaluate the intervention. Ninety-four per cent of all Masters and 88% of all PhD students responded to the questionnaire. Grouped by language, 86% of all ENS and 100% of the ESL students responded. For analysis using data from Blackboard, all students were included.

Figure 5a shows that 37% (ENS) and 70% (ESL) of the students watched all the podcasts. If students who watched only some of the podcasts are included, these figures rise to 89 and 91%, respectively. As in intervention phase 1, students considered that the podcasts had a positive impact on their understanding of lectures and workshops, irrespective of language and programme (ENS–ESL: p=0.42, z=−0.803; Masters–PhD: p=0.29, z=−0.907) (Figure 5b). However, responses differed on whether the podcasts helped with the language issue (Figure 5c). Some of the ESL students said they had no language problem, while one ENS student said the podcasts had helped them with language. This student may have been referring to subject-specific jargon, or to difficulty in understanding a staff member who was not a native English speaker.

Figure 5.

Students’ views on the podcasts (cohort 2012–13). Number of students from each category: programme and language who (a) watched the podcasts; (b) level of agreement with the questionnaire statement ‘the podcast helped with lecture and workshop content understanding…’; (c) level of agreement with the questionnaire statement ‘the podcast helped with my language issue…’. Total number of students=30, ENS=19, ESL=11; Masters=16, PhD=14; Likert scale. ENS: English native speaker; ESL: English as second language.

Table 5 summarises students’ attempts at the tests. Test1 and Test2 show low completion percentages. From Test3 onwards, use of the tests increased, with the exception of Test7, when students reported a clash with a summative assessment in the same week. Figure 6a and b show the percentage of students in each category (language and programme) who took each test. As in phase 1, no statistically significant difference was found between ENS and ESL (t-test: t17,11=−0.74, p=0.47) or between Masters and PhD students (t-test: t29,10=0.66, p=0.52), indicating that they used the tests with similar frequency.

Figure 6.

Students’ attempts at online tests (cohort 2012–13). Percentage of students from each category: (a) ENS (English native speaker) and ESL (English as second language); and (b) Masters and PhD students who took each of the nine online tests. N=the number of students in each group is indicated in parentheses. (c) Number of students from each language and degree group who agreed with the questionnaire statement ‘the online test helped with my self-assessment…’

Table 5. Online tests 2012–13.

| Online test | OT1 | OT2 | OT3 | OT4 | OT5 | OT6 | OT7 | OT8 | OT9 | Mean±SD |
|---|---|---|---|---|---|---|---|---|---|---|
| Not finished | 6 | 9 | 9 | 6 | 0 | 6 | 9 | 13 | 9 | – |
| Completed | 25 | 28 | 50 | 59 | 56 | 47 | 15 | 59 | 31 | – |
| Total | 31 | 37 | 59 | 65 | 56 | 53 | 24 | 72 | 40 | 50±30 |

Percentage of students who attempted each online test (OT) with or without completion.
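Applying the same recomputation to Table 5 makes the pattern described in the text easy to see: attempts rise from OT3 onwards, OT7 is the outlier, and every attempt at OT5 was completed. In this minimal Python sketch, the names are assumptions. The plain mean of the Total row comes out at roughly 49%, slightly below the printed 50±30. The table does not specify how that summary was calculated.

```python
from statistics import mean

# Percentages of students per online test, transcribed from Table 5 (2012-13)
NOT_FINISHED = {"OT1": 6, "OT2": 9, "OT3": 9, "OT4": 6, "OT5": 0,
                "OT6": 6, "OT7": 9, "OT8": 13, "OT9": 9}
COMPLETED = {"OT1": 25, "OT2": 28, "OT3": 50, "OT4": 59, "OT5": 56,
             "OT6": 47, "OT7": 15, "OT8": 59, "OT9": 31}

attempted = {t: NOT_FINISHED[t] + COMPLETED[t] for t in COMPLETED}
completion = {t: COMPLETED[t] / attempted[t] for t in COMPLETED}
mean_attempted = mean(attempted.values())

# Tests attempted by fewer students than average (OT7 coincided with a summative assessment)
below_mean = [t for t, pct in attempted.items() if pct < mean_attempted]
print(attempted)
print(f"mean attempt rate: {mean_attempted:.1f}%")
print("below mean:", below_mean)
```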

ENS students (Masters or PhD) who responded to the questionnaire said the tests helped them with self-assessment. In the ESL group, the difference between those who agreed with the statement and those who either disagreed or had no opinion was smaller than in the other groups (Figure 6c).

The self-monitoring analysis, based on how many times and when students took the tests, revealed that very few students took them both before and after the face-to-face sessions. This confirms the previous interpretation: students used the tests for self-assessment, but few took the opportunity to self-monitor their progress. Students commented favourably on the immediate feedback from the tests. This suggests that students can value formative feedback as a means of engagement, rather than being driven simply by grades, as is commonly reported (Nicol and Macfarlane-Dick 2006). The finding is consistent with the 3-year study of Higgins, Hartley, and Skelton (2002), which showed that formative assessment feedback is essential to encourage deep learning. Overall, as in the previous year, the tests clearly had a positive effect on students’ perception of their learning.

Fifty-two per cent of the students completed the final module evaluation. Students’ comments reinforced the findings from the survey and highlighted other positive aspects of the blended structure that had not previously been considered:

As a dyslexic PhD student, your blended course removed the barriers found with traditional styles of teaching and made the material accessible and understandable not just for myself but for all students of all abilities.

Table 6 shows students’ opinions of SDL. Ninety-seven per cent said they understood the meaning of SDL. Half (50%) of the Masters students said they liked it, compared with 77% of the PhD students. Bhat, Rajashekar, and Kamath (2007), working with first-year medical students, noted that students have a mix of abilities and questioned whether all students would benefit from SDL. The authors compared the marks students achieved on topics taught through SDL with those on topics taught through didactic lectures. The SDL activity consisted of individual research on a specific topic, followed by presentation and discussion. Students who achieved good results with didactic lectures scored even higher marks in SDL topics, whereas lower achievers showed no significant difference in marks. If this study implies a correlation between academic level and readiness for SDL, then we would expect higher-performing students to appreciate the SDL approach more than others. This is consistent with the greater appreciation of SDL among PhD students (generally holding first-class UG degrees) than among Masters students (a mix that includes students with a 2.2 in the UK degree classification). Inventories were not used to measure SDL readiness, as this would have required students to fill in another long questionnaire.

Table 6. Students’ view of SDL (cohort 2012–13).

| Programme | Know and like | Know and do not like | No meaning |
|---|---|---|---|
| Masters | 8 (50%) | 7 (44%) | 1 (6%) |
| PhD | 10 (77%) | 3 (23%) | 0 (0%) |

Know and like = the student knows the meaning of SDL and likes the approach; know and do not like = the student knows the meaning of SDL but does not like the approach; no meaning = the student does not know the meaning of SDL.
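
The percentages in Table 6 are row percentages within each programme, based on 16 Masters and 13 PhD respondents, and the 97% quoted in the text corresponds to the 28 of 29 respondents who knew the meaning of SDL. A short Python check of this arithmetic, using only the counts in the table (the function names are illustrative):

```python
# Counts of students from Table 6 (cohort 2012-13).
TABLE6 = {
    "Masters": {"know_like": 8, "know_dislike": 7, "no_meaning": 1},
    "PhD": {"know_like": 10, "know_dislike": 3, "no_meaning": 0},
}


def row_percentages(row):
    """Express each count as a whole-number percentage of its programme's total."""
    total = sum(row.values())
    return {category: round(100 * n / total) for category, n in row.items()}


def percent_knowing(table):
    """Percentage of all respondents who knew the meaning of SDL (liked it or not)."""
    knowing = sum(r["know_like"] + r["know_dislike"] for r in table.values())
    total = sum(sum(r.values()) for r in table.values())
    return round(100 * knowing / total)


for programme, row in TABLE6.items():
    print(programme, sum(row.values()), row_percentages(row))
print(percent_knowing(TABLE6))  # 97
```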

Although some students, particularly Masters students, were not positive about SDL, the same students perceived the advantages of ORA and, indeed, requested that other modules follow suit and provide them. This contradiction perhaps suggests that students did not fully appreciate the connection between the two.

As in the focus group evaluation of the 2011–12 cohort, students said SDL activities should be supported and structured, as advocated by Knowles (1975). However, their comments also showed that they prefer a combination of SDL and face-to-face activities to SDL alone. Overall, students’ satisfaction with the ORA was clear, and they requested that similar activities be provided in other modules.

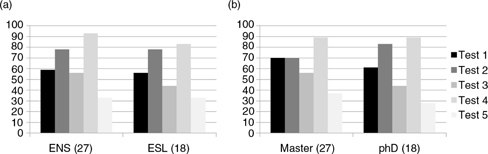

Did the blended activity influence students’ performance?

This section evaluates whether the blended structure of the module had a tangible influence on the students’ marks, or whether students merely perceived that it had helped them to learn. For each year, students were divided into those who had completed more or fewer than 50% of the tests, and Masters and PhD students were compared within each of these groups. Tukey post hoc comparisons showed no significant difference between Masters and PhD students in any of the four comparisons: in 2011–12 the p-values were 0.999 for the >50% group and 0.628 for the <50% group, and the corresponding 2012–13 values were 0.999 and 0.885. Masters and PhD students were therefore pooled within each year, after which a significant difference in performance was seen between students who took more or fewer than 50% of the tests. For the 2011–12 cohort, the average final module marks were 69±7.2 and 75±6.0 for students who took <50% and >50% of the tests, respectively (t-test: t40,2=−3.044, p=0.004). For the 2012–13 cohort the corresponding mean marks were 67±11.0 and 74±5.0 (t-test: t27,3=−2.079, p=0.041). However, the tests are unlikely to have been the only influence on students’ marks. Analysis of the questionnaire showed a correlation between the use of online tests and podcasts: most students used either both or neither. It is therefore perhaps more likely that the students who engaged more assiduously with the module in general were rewarded with higher marks. Importantly, because the online tests were designed mainly to tell students what to study for the next session and carried no marks, any difference in performance deriving from their use can be credited to student engagement with the SDL approach. Kibble (2007) likewise found significantly better exam performance among students who took voluntary, formative online quizzes than among those who did not.

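
The comparison above pools Masters and PhD students, splits them at 50% test completion and compares the two groups’ final marks with a t-test. The section does not state which t-test variant was used or report the group sizes, so the Python sketch below assumes Welch’s unequal-variance test and uses made-up marks purely to show the calculation; `split_by_completion`, `welch_t` and the record format are hypothetical.

```python
import math
from statistics import mean, stdev


def split_by_completion(records, threshold=0.5):
    """Pool all students and split their final marks by the fraction of tests completed.

    `records` is a list of (fraction_of_tests_completed, final_mark) pairs,
    a hypothetical format. Students exactly at the threshold are left out,
    which is an assumption: the text only says "more or less than 50%".
    """
    below = [mark for frac, mark in records if frac < threshold]
    above = [mark for frac, mark in records if frac > threshold]
    return below, above


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for two samples."""
    se2_a = stdev(a) ** 2 / len(a)  # squared standard error of the mean of a
    se2_b = stdev(b) ** 2 / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1)
    )
    return t, df


# Hypothetical marks for six students (not data from this study).
records = [(0.2, 62), (0.4, 70), (0.3, 68), (0.8, 74), (0.9, 78), (1.0, 73)]
below, above = split_by_completion(records)
t, df = welch_t(below, above)
print(f"t = {t:.3f}, df = {df:.1f}")
```

A negative t, as in the reported results, simply means the lower-completion group had the lower mean mark. If SciPy is available, `scipy.stats.ttest_ind(a, b, equal_var=False)` performs the same Welch test and also returns a p-value, and `scipy.stats.ttest_ind_from_stats` works from means, standard deviations and group sizes when only summary statistics are at hand.
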
Interestingly, no significant difference was found between the final module marks of Masters and PhD students. However, this finding should be treated with caution because of the relatively small sample size available in this study. Unfortunately, large postgraduate classes in the biological sciences, particularly mixed-programme cohorts, are rare, which makes further analysis difficult. An extensive literature search revealed no similar published studies in other subject areas, highlighting a gap in the literature on mixed-programme postgraduate cohorts.

For the podcasts, although their direct impact on students’ marks cannot be measured, the findings of this study suggest that they increased students’ perception of learning. The work of Clark, Taylor, and Westcott (2012) with postgraduate students also showed that students perceived learning benefits from podcasts introduced to address their prior knowledge. Price et al. (2006) argue that short podcasts are ‘within the attention span of an audience’, which could contribute to the higher use also observed in other studies (Kukulska-Hulme 2012; Morrissey 2012).

Discussion and conclusion

This study analysed whether the provision of online resources could help to engage and motivate students to become self-directed learners. Sharpe et al. (2006) highlighted that using online resources to provide immediate feedback helps to engage students in out-of-class activities. Analysis of the interventions revealed that most students in the two cohorts used the resources, and that their perception of a positive impact on their learning was accompanied by a tangible increase in their module marks as well as higher reported motivation for the module. This result partially contradicts Laird and Kuh (2005), who found a strong positive relationship between the use of online resources and students’ engagement with active learning but no corresponding increase in marks.

There was an expectation that different programme cohorts would make different use of the online activities. However, no difference in the use of resources was found between Masters students, who can be awarded a final grade of ‘distinction’ or ‘merit’, and doctoral students, who may only pass or fail. Nor was there any difference in usage between the ENS and ESL student groups, indicating that the resources embedded in the module were used consistently irrespective of students’ programme or language skills.

The blended approach provided to support SDL was perceived and valued by all students. Nevertheless, a difference was observed in how students perceived SDL: doctoral students seemed to prefer the approach and were more receptive to it than Masters students. Some students reported that the SDL activity helped them to achieve more independence than traditional approaches to teaching. The new activities implemented in this module appear to have created a learning environment that not all students had experienced before. Given this, differences in how students appreciated the learning tools are to be expected, as not all students welcome changes in their learning environment, whatever their beneficial effect (Entwistle and Peterson 2004).

Self-monitoring is a key feature of SDL, so students’ lack of familiarity with SDL could in turn explain their unfamiliarity with self-monitoring. The use of online resources to support and enhance student learning and assessment is well documented in the literature. However, we found little literature on using the same online test to support and enhance self-monitoring, particularly when the test does not contribute to module marks.

This study examined just one model of SDL: active learning through the use of online resources. Although students appreciated the design of the SDL activities, a limitation of this study was the lack of an initial assessment of students’ prior experience of SDL, so the extent to which students developed their SDL skills cannot be quantified. An open question is whether doctoral students are better prepared for an SDL approach because they already have the required skills. Ting (1996) and Shokar et al. (2002) describe positive correlations between scores on the Self-Directed Learning Readiness Scale (SDLRS; Guglielmino 1978) and academic performance. In contrast, Nepal and Stewart (2010) and Abraham et al. (2011) report no correspondence between SDL readiness and grade point average (GPA). Abraham et al. (2011) noted that although higher achievers scored highly in the self-control category of the SDLRS, all students seemed to require support with their self-management skills. This study provides further evidence that students require structured support before commencing SDL activities, until they achieve a greater degree of independence. In this regard, students’ satisfaction with the structure adopted in this study can be taken as a positive reflection of the care that went into providing that support.

References

Abraham, R. R., et al. (2011) ‘Exploring first-year undergraduate medical students’ self-directed learning readiness to physiology’, Advances in Physiology Education, vol. 35, no. 4, pp. 393–395.

Bhat, P. P., Rajashekar, B. & Kamath, U. (2007) ‘Perspectives on self-directed learning – The importance of attitudes and skills’, Bioscience Education, vol. 10, [online] Available at: http://www.heacademy.ac.uk/sites/default/files/beej.10.c3.pdf

Blumenfeld, P. C., et al. (2006) ‘Learning with peers: from small group cooperation to collaborative communities’, Educational Researcher, vol. 25, no. 8, pp. 37–40.

Braun, V. & Clarke, V. (2006) ‘Using thematic analysis in psychology’, Qualitative Research in Psychology, vol. 3, no. 2, pp. 77–102.

Brookfield, S. (1991) Developing Critical Thinkers: Challenging Adults to Explore Alternative Ways of Thinking and Acting, Jossey-Bass, San Francisco, CA.

Chung, J. & Davies, I. K. (1995) ‘An instructional theory for learner control: revisited’, [online] Available at: http://www.editlib.org/p/78439

Clark, S., Taylor, L. & Westcott, M. (2012) ‘Using short podcasts to reinforce lectures’, Proceedings of The Australian Conference on Science and Mathematics Education, Sydney, Australia, pp. 22–27.

Entwistle, N. J. & Peterson, E. R. (2004) ‘Conceptions of learning and knowledge in higher education: relationships with study behaviour and influences of learning environments’, International Journal of Educational Research, vol. 41, no. 6, pp. 407–428.

Ferrance, E. (2000) ‘Action research’, The Education Alliance, [online] Available at: http://www.brown.edu/academics/education-alliance/sites/brown.edu.academics.education-alliance/files/publications/act_research.pdf

Guglielmino, L. M. (1978) ‘Development of the self-directed learning readiness scale’, Dissertation Abstracts International, vol. 38, no. 11-A, p. 6567.

Handelsman, J., et al. (2004) ‘Scientific teaching’, Science, vol. 304, pp. 521–522.

Higgins, R., Hartley, P. & Skelton, A. (2002) ‘The conscientious consumer: reconsidering the role of assessment feedback in student learning’, Studies in Higher Education, vol. 27, no. 1, pp. 53–64.

Hingamp, P., et al. (2008) ‘Metagenome annotation using a distributed grid of undergraduate students’, PLoS Biology, vol. 6, no. 11, pp. 2362–2367.

Kibble, J. (2007) ‘Use of unsupervised online quizzes as a formative assessment in a medical physiology course: effects of incentives on student participation and performance’, Advances in Physiology Education, vol. 31, no. 3, pp. 253–260.

Knowles, M. (1975) Self-Directed Learning: A Guide for Learners and Teachers, Association Press, New York.

Koohang, A. & Paliszkiewicz, J. (2013) ‘Knowledge construction in e-learning: an empirical validation of an active learning model’, Journal of Computer Information Systems, vol. 53, no. 3, pp. 109–114.

Kukulska-Hulme, A. (2012) ‘How should the higher education workforce adapt to advancements in technology for teaching and learning?’, The Internet and Higher Education, vol. 15, no. 4, pp. 247–254.

Laird, T. F. N. & Kuh, G. D. (2005) ‘Student experiences with information technology and their relationship to other aspects of student engagement’, Research in Higher Education, vol. 46, no. 2, pp. 211–233.

Morrissey, J. (2012) ‘Podcast steering of independent learning in higher’, AISHE-J, vol. 4, no. 1, pp. 1–9.

Nepal, K. P. & Stewart, R. A. (2010) ‘Relationship between self-directed learning readiness factors and learning outcomes in third year project-based engineering design course’, 21st Annual Conference for the Australasian Association for Engineering Education, Sydney, Australia, pp. 496–503.

Nicol, D. J. & Macfarlane-Dick, D. (2006) ‘Formative assessment and self-regulated learning: a model and seven principles of good feedback practice’, Studies in Higher Education, vol. 31, no. 2, pp. 199–218.

Nie, N. H. (1975) SPSS: Statistical Package for the Social Sciences, McGraw-Hill, New York.

Price, A., et al. (2006) ‘A history and informal assessment of the Slacker Astronomy podcast’, Astronomy Education Review, [online] Available at: http://arxiv.org/ftp/astro-ph/papers/0606/0606326.pdf

Sadler, D. R. (1989) ‘Formative assessment and the design of instructional systems’, Instructional Science, vol. 18, pp. 119–144.

Sharpe, R., Benfield, G., Roberts, G. & Francis, R. (2006) The undergraduate experience of blended e-learning: a review of UK literature and practice, Higher Education Academy, London.

Shokar, G. S., et al. (2002) ‘Self-directed learning: looking at outcomes with medical students’, Family Medicine, vol. 34, no. 3, pp. 197–200.

Ting, T. M. (1996) The Relationship between the Self-Directed Learning Readiness, Attitudes towards Computers and Computer Competencies, Master’s thesis, National Chung Cheng University, Taiwan.

Via, A., et al. (2013) ‘Best practices in bioinformatics training for life scientists’, Briefings in Bioinformatics, vol. 14, no. 5, pp. 528–537.

Voelkel, S. (2013) ‘Combining the formative with the summative: the development of a two-stage-online test to encourage engagement and provide personal feedback in large classes’, Research in Learning Technology, vol. 21, no. 1, article 19153.

Volery, T. & Lord, D. (2000) ‘Critical success factors in online education’, The International Journal of Educational Management, vol. 14, no. 5, pp. 216–223.